A lot has happened in data and tech lately: AWS launched new analytics tools, dbt and Fivetran joined forces, and everyone seems to be experimenting with MCP and agentic workflows.

I’ve collected the releases, articles, and discussions from the past six months that I found genuinely useful as a data engineer. If you’re building data platforms or writing production code, hopefully you’ll find something worthwhile here too.

AWS

- S3 Tables - AWS’s new storage option optimized for analytics workloads. Purpose-built for tabular data, with support for the Apache Iceberg format.

- SageMaker Lakehouse - Provides unified access across various AWS services (S3, Redshift, etc.). AWS is clearly targeting customers with data platform needs, and this is a significant step toward fulfilling them.

- Kiro (agentic IDE) - An AI IDE from AWS built around spec-driven development. I tried it for smaller tasks around the time of the release. Not too bad, but right now I think there are better options.

Databricks

- Databricks Apps - Helps you build data and AI apps. It’s well integrated within AWS. Easy to get mini apps running quickly. I tried it early after release and was surprised by how few issues I encountered. I’ve had worse experiences with Databricks products shortly after their release, so this was a pleasant surprise.

dbt

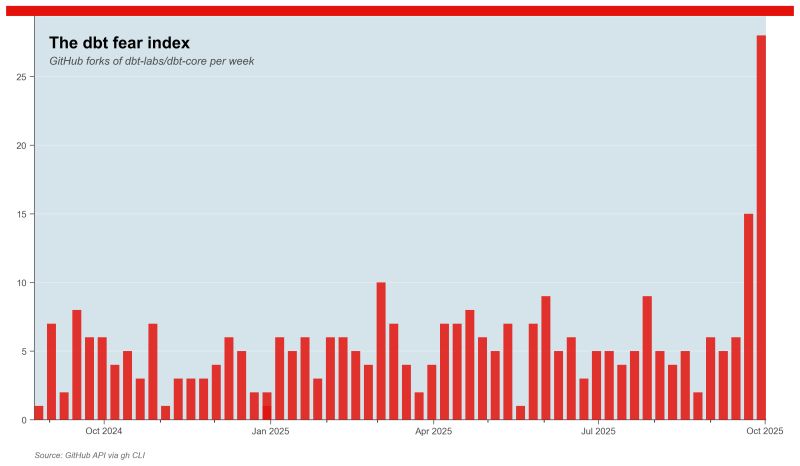

- Fivetran merges with dbt - After Fivetran acquired SQLMesh, what many people feared actually happened: Fivetran merged with dbt. I’ll be interested to see how it works out for them, as I believe much of the dbt community isn’t a big fan of Fivetran.

- dbt Launch Showcase 2025 Recap

- dbt Fusion engine - New dbt engine that’s faster and will be the heart of most future dbt releases.

- dbt extension + MCP

- dbt ecosystem at BlaBlaCar - A very good template for dbt projects! Includes most of the tools commonly found useful on dbt projects, plus a few more.

AI/LLM

- 2 years of using AI - Nice overview of how the AI landscape changed over time.

- Video - Pragmatic Eng: SWE with LLMs in 2025

- AI coding slowdown: Research shows AI tools slow us down instead of speeding up - Research suggests people are actually 19% slower with AI. One outlier was a person who already had some experience using Cursor and achieved a nearly 40% improvement. I’m unsure how much weight this research carries, but my takeaway is: there’s a learning curve to using LLMs effectively.

- Pragmatic Eng. Newsletter’s take on this topic

- How AI is changing software engineering at Shopify with Farhan Thawar

- Adopting Claude Code: Riding the Software Economics Singularity (Superset’s experience with CC) - I’m never sure how much of these articles is PR, but I do agree with one line: “Code review became a different sport.”

- Vibe-coding to vibe engineering

“what should we call the other end of the spectrum, where seasoned professionals accelerate their work with LLMs while staying proudly and confidently accountable for the software they produce?”

“I’m increasingly hearing from experienced, credible software engineers who are running multiple copies of agents at once, tackling several problems in parallel and expanding the scope of what they can take on. I was skeptical of this at first but I’ve started running multiple agents myself now and it’s surprisingly effective, if mentally exhausting!”

- Agentic AI for Dummies

MCP

- MCP is eating the world and it’s here to stay - I agree, it’s one of the most impactful innovations of the past few months. The new kid on the block is Claude Skills, which I haven’t tried yet, but people seem to be excited about it.

- Model Context Protocol Explained

- dbt extension + MCP

- MCP Servers GitHub repo - Everyone is building MCP servers, and this repo collects some of them.

- Danger of MCP: Supabase leak - … although you have to be careful which one to use.

Discussion about AI, less technical

- My AI Skeptic Friends Are All Nuts

- Using AI for seasoned developers

- Why agents are bad pair programmers

- Writing code was never the bottleneck

- Uber’s reversed approach: using AI to review code

- Six months in AI with pelicans

- Karpathy: Software in the era of AI

“Software is changing, again. We’ve entered the era of “Software 3.0,” where natural language becomes the new programming interface and models do the rest.”

“He explores what this shift means for developers, users, and the design of software itself, that we’re not just using new tools, but building a new kind of computer.”

- On the road to production grade

- Prompt Injection Design Patterns - I’m very interested to see this new trend of people trying to build secure GenAI applications.

- GenAI Patterns (Martin Fowler) - Similarly, some GenAI patterns to use.

- The lethal trifecta for AI agents: private data, untrusted content, and external communication

“If you are a user of LLM systems that use tools (you can call them “AI agents” if you like) it is critically important that you understand the risk of combining tools with the following three characteristics. Failing to understand this can let an attacker steal your data.”
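To make the trifecta concrete, here is a minimal sketch of how you might audit the tools you hand to an agent. Everything in it is an assumption for illustration: the `Tool` class, its three flags, and the tool names are hypothetical and do not come from the linked article or from any MCP library. The only idea taken from the article is that the risk appears when private data access, exposure to untrusted content, and external communication are all available at once.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Tool:
    """Hypothetical description of one tool exposed to an agent."""

    name: str
    reads_private_data: bool = False
    sees_untrusted_content: bool = False
    communicates_externally: bool = False


def has_lethal_trifecta(tools: list[Tool]) -> bool:
    """Return True if the tools, taken together, cover all three risks."""
    return (
        any(t.reads_private_data for t in tools)
        and any(t.sees_untrusted_content for t in tools)
        and any(t.communicates_externally for t in tools)
    )


# Illustrative tool names, not real MCP servers.
db_reader = Tool("internal_db_reader", reads_private_data=True)
ticket_reader = Tool("support_ticket_reader", sees_untrusted_content=True)
http_poster = Tool("http_post", communicates_externally=True)

print(has_lethal_trifecta([db_reader, ticket_reader]))               # False
print(has_lethal_trifecta([db_reader, ticket_reader, http_poster]))  # True
```

The check looks at the combined tool set rather than at each tool in isolation, because no single tool in the example is dangerous on its own. A real audit would also need to decide how to classify each tool, which is the hard part and is not covered here.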

- It is time to take agentic workflows for data work seriously - Based on a demo from one of my data engineering colleagues using MCPs, I have to agree.

- Agentic coding in Analytics Engineering

- dlt - Great ingestion tool for simple stuff. If you haven’t heard of dlt yet, it’s like dbt but for the ingestion layer (paraphrasing a comment on the tool).

- Python ELT with dlt workshop

- uv is the best thing to happen to the Python ecosystem - I agree.

“It’s 2025. Does installing Python, managing virtual environments, and synchronizing dependencies between your colleagues really have to be so difficult? Well… no! A brilliant new tool called uv came out recently that revolutionizes how easy installing and using Python can be.”

Misc

- The Data News newsletter by Christophe Blefari released a very similar collection of the most important articles of the past six months a couple of days ago. There was significant overlap between my links and his, but I included a few of his extra links that I particularly liked. Not all of them, so if you want a different perspective, definitely check out the article.

- Salesforce acquires Informatica

- https://www.whoisthebestcdo.com - A game to play if you’re curious what a CDO’s day might look like. How long can you survive?

- Reddit sues Anthropic

- Ancestry’s optimization of a 100 billion row Iceberg table

- Elixir and the Super Bowl - Would you have guessed that Elixir is used to make the Super Bowl better?

- Train station proximity and kebab quality analysis

- Database Trends from HN articles - Barely any mention of enterprise products (obvious HN bias), e.g. MSSQL, Oracle, Databricks.

- IO devices and latency

- A guide to AI agents for data engineers

- Meta’s new smart glasses demo - Probably my favourite scene from a live demo, maybe ever. After failing live to showcase a feature of the product Zuckerberg came up with some very unique excuses:

- First fail: “It’s a skill issue”

- Second time: “Wifi must be bad”

- Python documentary - Great, quality documentary.

“This 90-minute documentary features Guido van Rossum, Travis Oliphant, Barry Warsaw, and many more, and they tell the story of Python’s rise, its community-driven evolution, the conflicts that almost tore it apart, and the language’s impact on… well… everything.”

- AI and the change of the hiring process (part 1 of the article), another article on a similar topic, and The state of the SWE job market in 2025 - I remember listening to a podcast about a year ago that mentioned some of these AI-assisted interviewing tools, and thinking they might change the interviewing process. Looks like they did just that.

“Young people are using ChatGPT to write their applications; HR is using AI to read them; no one is getting hired”.

- How tech companies measure the impact of AI on software development

- Visualize SQL with ChatGPT - A neat example of something I would’ve never thought of.

- Data center investment boom

“[…] staggering data-centre deals unveiled by firms such as OpenAI, Nvidia and Oracle, is aimed at increasing the computing power its protagonists believe is needed to supply generative AI.”

- Deloitte to pay money back to Albanese government after using AI in $440,000 report - A good reminder that AI isn’t always the solution.

“Deloitte will provide a partial refund to the federal government over a $440,000 report that contained several errors, after admitting it used generative artificial intelligence to help produce it.”

- Did you ever get hacked by a job interview? - This person came close. He decided to test the code with AI just before running it, and it uncovered nasty, obfuscated code inside the project.

- SQL anti-patterns refresher - A list of common SQL mistakes, such as:

- Mishandling Excessive CASE WHEN Statements

- Using Functions on Indexed Columns

- Using SELECT * In Views

- Overusing DISTINCT to “Fix” Duplicates
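Two of these anti-patterns are easy to reproduce locally. The sketch below is my own illustration rather than code from the linked article: it uses Python’s built-in `sqlite3` module, and the `orders` and `payments` tables, their columns, and the index name are all made up for the example. Other databases will show different query plans, so treat the output as specific to SQLite.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript(
    """
    CREATE TABLE orders (id INTEGER PRIMARY KEY, created_at TEXT, amount REAL);
    CREATE INDEX idx_orders_created_at ON orders (created_at);
    CREATE TABLE payments (id INTEGER PRIMARY KEY, order_id INTEGER, paid REAL);
    INSERT INTO orders VALUES
        (1, '2025-01-01 09:30:00', 100.0),
        (2, '2025-01-02 14:00:00', 50.0);
    -- Order 1 was paid in two instalments, so a plain join fans out.
    INSERT INTO payments VALUES (1, 1, 60.0), (2, 1, 40.0), (3, 2, 50.0);
    """
)


def plan(sql: str) -> str:
    """Return the detail text of the first step of SQLite's query plan."""
    return conn.execute("EXPLAIN QUERY PLAN " + sql).fetchone()[3]


# Anti-pattern: wrapping the indexed column in a function prevents an
# index lookup, so SQLite has to scan every row.
wrapped = plan("SELECT id FROM orders WHERE date(created_at) = '2025-01-01'")
# Rewrite: an equivalent range predicate on the bare column can use the index.
ranged = plan(
    "SELECT id FROM orders "
    "WHERE created_at >= '2025-01-01' AND created_at < '2025-01-02'"
)
print(wrapped)
print(ranged)

# Anti-pattern: the join returns order 1 twice; DISTINCT would only hide that.
fan_out = conn.execute(
    "SELECT o.id FROM orders o JOIN payments p ON p.order_id = o.id"
).fetchall()
# Rewrite: ask the actual question (orders that have a payment) with EXISTS.
paid_orders = conn.execute(
    "SELECT o.id FROM orders o "
    "WHERE EXISTS (SELECT 1 FROM payments p WHERE p.order_id = o.id) "
    "ORDER BY o.id"
).fetchall()
print(len(fan_out), paid_orders)
```

The first plan should start with `SCAN` and the second with `SEARCH`, which is how SQLite distinguishes a full pass from an index lookup. The exact wording after that varies between SQLite versions.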

- DuckLake by MotherDuck, DuckLake Enters the Lakehouse Race - Very interesting idea for two reasons: it comes from the MotherDuck team, and it seems pretty obvious yet no one has done it before.

“This my friends, is what it comes down too. DuckLake is just another Lake House format with parquet storage on the backend. Nothing new there. It’s the catalog and metadata management that DuckDB recognized has been a huge frustration for Lake House users.”

- $10 a month data lakehouse - A neat idea with some interesting solutions for cutting costs.

- Zalando used AI to summarize and help understand post mortems

- How people use ChatGPT

- Cloudflare’s data platform

My short takeaway

Teams are getting real value from AI, but everyone is still figuring out how to adapt to a rapidly changing field. MCP might also be more consequential than most people realize.